Measuring and monitoring network performance is required for two important reasons. The first is to provide the tools needed to view network performance from the standpoint of Grid applications, and hence to identify any strategic issues that may arise (such as bottlenecks, points of unreliability, and Quality of Service needs). The second is to provide the metrics required by Grid resource broker services. This document outlines the requirements for network monitoring. The classical metrics associated with network monitoring are then described, and existing monitoring methods and associated tools are cataloged. The architectural design of the monitoring system is presented. It comprises four functional units, namely, monitoring tools or sensors; a repository for collected data; the means of analysis of that data to generate network metrics; and the means to access and to use the derived metrics. The document also describes the tools that have been selected for this prototype release and how they provide metric information both to the Grid middleware and, via visualization, to a human observer.

Network Monitoring Tools

Network monitoring tools are used to calculate the metrics that characterize a network. These metrics will be used by the Grid middleware to optimize the performance of Grid applications; they will also be used by network researchers and developers, and by network support personnel, to maintain and manage the network on which the operation of the Grid depends.

Network monitoring is used in two distinct ways with respect to the Grid and together these describe the aims for network monitoring.

- First and foremost, network monitoring will be used by Grid applications to optimise their usage of the networks that comprise the Grid. Of primary importance will be the publication, to the Grid middleware, of the metrics that describe the current and future behaviour of the network, so that Grid applications can adjust their behaviour to make the best use of this resource.

- Secondly, it will be used to provide background measurements of network performance which will be of value to network managers and those tasked with the provision of network services for Grid applications.

A network performance tool for the Grid environment, Gloperf [R30], was designed as part of the Globus toolkit but is not available in the Globus version running on EDG Testbed1. For that reason, WP7 has developed a network monitoring architecture for the Grid.

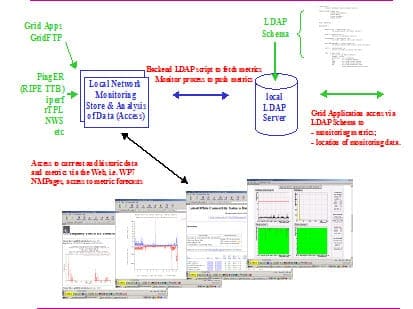

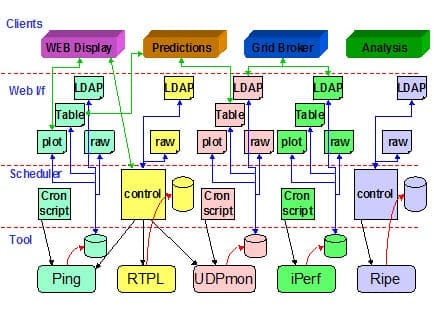

Figure 1 shows the network monitoring architecture in outline. It comprises four functional units, namely, monitoring tools or sensors; a repository for collected data; the means of analysis of that data to generate network metrics; and the means to access and to use the derived metrics.

Grid applications access network monitoring metrics via LDAP services according to a defined LDAP schema. The LDAP service itself gathers and maintains the metric data via back-end scripts that fetch, or have pushed to them, the current metric information from the local network monitoring data store. Independently, a set of network monitoring tools is run from the site to collect data describing the local view of network access to other sites in the Grid. This data is stored in the network monitoring data store, which is modeled here as a single entity. A set of scripts associated with each monitoring tool provides web-based access for viewing and analyzing the related network metrics. This architecture allows additional monitoring tools to be added easily. The only requirements are that each new tool provides the means to analyze and visualize its data, together with either a push mechanism to update the local LDAP server or a back-end script that gives the LDAP server access to its specific metrics.
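As a minimal sketch of the back-end script role described above, the Python fragment below keeps only the newest measurement for each source, destination, tool and metric, and renders it as an LDIF entry that an LDAP server could load. The record layout, the objectClass `netMonMetric`, the attribute names and the base DN are all hypothetical; the actual WP7 LDAP schema is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRecord:
    """One measurement as it might be held in the local data store (hypothetical layout)."""
    source: str
    destination: str
    tool: str        # e.g. "pinger", "iperfer", "udpmon"
    metric: str      # e.g. "rtt", "loss", "tcp_throughput"
    value: float
    units: str
    timestamp: int   # seconds since the epoch


def latest(records):
    """Keep only the most recent record for each (source, destination, tool, metric)."""
    newest = {}
    for rec in records:
        key = (rec.source, rec.destination, rec.tool, rec.metric)
        if key not in newest or rec.timestamp > newest[key].timestamp:
            newest[key] = rec
    return list(newest.values())


def to_ldif(rec, base_dn):
    """Render one record as an LDIF entry; objectClass and attribute names are hypothetical."""
    dn = f"metric={rec.metric},tool={rec.tool},dst={rec.destination},src={rec.source},{base_dn}"
    return "\n".join([
        f"dn: {dn}",
        "objectClass: netMonMetric",
        f"metric: {rec.metric}",
        f"value: {rec.value}",
        f"units: {rec.units}",
        f"timestamp: {rec.timestamp}",
    ]) + "\n"
```

A push-style tool could call `to_ldif` itself after each measurement; a pull-style back-end would run `latest` over the data store whenever the LDAP server asks for fresh values.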

Network Monitoring Tools: Active

Basic Tools

The well-known tools (traceroute, pathchar, netperf, etc.) will be used to measure the basic metrics.

Many network monitoring tools use ICMP, a layer 3 protocol, which may be subject to different traffic management strategies from TCP- or UDP-based traffic (layer 4 protocols). For example, under congestion ICMP traffic is often preferentially dropped or handled at reduced priority. However, published work comparing Ping with TCP SYN/ACK packet exchanges for characterising a given network route found the results to be broadly equivalent, which suggests that, to a first approximation, ICMP-based tools provide reliable measurements.

PingER

The use of PingER within the HEP community is well established. It measures the response time (round-trip time in milliseconds (ms)), the packet loss percentage, the variability of the response time over both the short term (a time scale of seconds) and longer periods, and the lack of reachability, i.e. no response to a succession of pings. It is described in detail at [R9].
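To make these metrics concrete, the sketch below summarises one set of pings to a single host. It is an illustration under stated assumptions, not PingER's code: the input format (an RTT in ms per ping, `None` for no reply) and the use of the mean absolute change between consecutive replies as the short-term variability measure are our own choices.

```python
from statistics import median


def summarize_ping_set(rtts_ms):
    """Summarize one set of pings; each entry is an RTT in ms, or None if no reply was received."""
    if not rtts_ms:
        raise ValueError("empty ping set")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    if not replies:
        # No response to the whole succession of pings: treat the host as unreachable.
        return {"loss_pct": loss_pct, "unreachable": True}
    # Short-term variability: mean absolute change between consecutive replies (illustrative choice).
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter = sum(diffs) / len(diffs) if diffs else 0.0
    return {
        "loss_pct": loss_pct,
        "unreachable": False,
        "min_ms": min(replies),
        "avg_ms": sum(replies) / len(replies),
        "median_ms": median(replies),
        "max_ms": max(replies),
        "jitter_ms": jitter,
    }
```

For example, `summarize_ping_set([10.0, None, 12.0, 14.0])` reports 25% loss, an average RTT of 12 ms and a variability of 2 ms.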

PingER data is stored locally to provide Web-based analysis of packet loss, RTT and the frequency distribution of RTT measurements, in both graphical and tabular form. The data is also collected centrally, allowing site-by-month or site-by-day history tables to be produced for all (or selected) sites as seen from the local monitoring point.

The PingER project has a well established infrastructure involving hundreds of sites in many countries all over the world and is particularly focused on the HEP/ESnet communities.

There is clearly a danger that ICMP rate limiting or ping discard policies will cause this approach to give invalid results or no results at all. This is well recognised, but to date comparisons between PingER, Surveyor and the RIPE NCC TTM box [R10] suggest that the concerns are unfounded. However, this offers no guarantee for the future.

RTPL

The RTPL (Remote Throughput Ping Load) package is used for periodic network performance tests between a set of locations, to view network performance from a user's perspective. The measurements consist of round-trip time and throughput between the locations. By default all pairs of locations in the set are tested, but specific location pairs can also be selected. In addition, the load on the participating monitoring systems is measured so that any change in performance can be related to the load on a particular machine. Because the aim is to measure network performance as a user sees it, rather than the maximum possible capacity of the network, the measurement parameters are left at their default values and the test durations are limited; otherwise, long-term statistics would blur the short-term fluctuations.
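The pair selection described above can be sketched as follows. We assume, as an illustration only, that pairs are ordered so that each direction of a path is tested separately; `rtpl_pairs` is a hypothetical name, not part of RTPL.

```python
from itertools import permutations


def rtpl_pairs(locations, selected=None):
    """Return the (source, destination) pairs to test.

    By default every ordered pair of distinct locations is used, so each
    direction is measured separately; `selected` optionally restricts the set.
    """
    pairs = list(permutations(locations, 2))
    if selected is None:
        return pairs
    wanted = set(selected)
    return [p for p in pairs if p in wanted]
```

With three locations the default gives six tests; passing an explicit list keeps only those pairs that are actually in the location set.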

Measurements are coordinated by a control host, which starts the tests at each participating location via a secure remote shell command; the results are returned by a similar mechanism. The following network performance measurements are made:

Throughput. As defined in [R4], throughput is “the maximum rate at which none of the offered frames are dropped by the device”. It quantifies the traffic flow that a network connection can handle. Throughput is measured with the public domain program netperf.

Round-trip. The round-trip time quantifies the responsiveness of a network connection. It is measured before the throughput, across the same connections. The round-trip time is measured with the system command ping.

Load. This is expressed here as the number of fully active processes on a host. It is not a network parameter, but it may help to explain unexpected drops in performance. The load is measured on the current host using the system command uptime.
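The measurement sequence above can be sketched as a list of remote commands issued by the control host for one location pair. The `ping -c` (packet count) and `netperf -H` (remote host) / `-l` (test length in seconds) options are standard; the use of `ssh` as the secure remote shell and the default count and duration are assumptions for illustration, since RTPL's actual settings are not given here. The commands are only built, not run; they could be passed to `subprocess.run`.

```python
import re


def rtpl_commands(src, dst, ping_count=10, netperf_seconds=10):
    """Build the remote commands for one (src, dst) pair, in the order described above."""
    remote = ["ssh", src]  # secure remote shell from the control host to the source location
    return [
        remote + ["ping", "-c", str(ping_count), dst],                # round-trip, measured first
        remote + ["netperf", "-H", dst, "-l", str(netperf_seconds)],  # throughput
        remote + ["uptime"],                                          # load on the source host
    ]


def parse_load(uptime_output):
    """Extract the 1-minute load average from typical `uptime` output."""
    m = re.search(r"load averages?:\s*([\d.]+)", uptime_output)
    if m is None:
        raise ValueError("no load average found")
    return float(m.group(1))
```

The regular expression accepts both the Linux form (`load average: 0.15, 0.10, 0.05`) and the BSD form (`load averages: 1.23 1.45 1.67`) of the `uptime` output.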

Results are presented on the Web by a Java applet, which loads the data files into the memory of the Web browser of the user analysing them.

RTPL is described in detail at [R11].

Iperf and Throughput Measurements – IperfER

Iperf [R16] is a tool for measuring maximum TCP bandwidth that allows various parameters and UDP characteristics to be tuned. It reports bandwidth, delay jitter and datagram loss. Iperf is widely used; [R17] describes the results of using iperf between several HEP institutes and provides a good example of its usage.
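One parameter that matters most when measuring maximum TCP bandwidth is the TCP window size, which can be set with iperf's `-w` option. A single TCP stream cannot exceed window/RTT, so the window should be at least the bandwidth-delay product of the path. The helper names below are our own; the arithmetic is the standard one.

```python
def bdp_bytes(bandwidth_bps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to fill the path."""
    return round(bandwidth_bps * rtt_s / 8)


def window_limited_throughput_bps(window_bytes, rtt_s):
    """Upper bound on single-stream TCP throughput imposed by the window size."""
    return window_bytes * 8 / rtt_s
```

For a 100 Mbit/s path with a 20 ms RTT, `bdp_bytes(100e6, 0.020)` gives 250000 bytes, whereas a 64 KiB window caps a single stream at about 26.2 Mbit/s on the same path.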

IperfER was developed from the PingER software, replacing the ping-based RTT and packet loss measurements with TCP throughput measurements made with the iperf tool. The graphical output of IperfER is very similar to that of PingER, and the throughput metrics are made available to the middleware via LDAP in a manner consistent with PingER.

UDPmon

UDPmon gives an estimate of the maximum usable bandwidth between two end nodes, a measurement of the packet loss, and the packet jitter, i.e. the variation in the arrival times of consecutive packets. This packet jitter is an estimator of the variation in the one-way latencies of the packets traversing the network, as defined in [R4]. UDPmon was developed by Richard Hughes-Jones (PPARC) within WP7 and, as there is no specific reference describing its operation, a short description is provided here.

UDPmon uses two programs, a listener called udp_bw_resp that receives the incoming test data and a monitor program called udp_bw_mon that performs the test to the remote host.

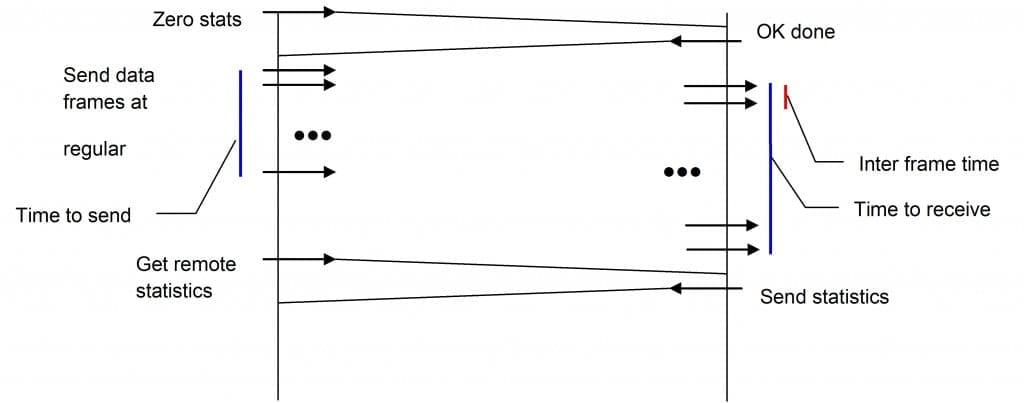

The test uses UDP/IP frames with the application protocol shown in Figure 3. The test starts with the Requesting node sending a “Clear Statistics” message to the Responder. On receiving the OK acknowledgement, the Requesting node sends a series of “data” packets separated by a fixed time interval. At the end of the test, the Requesting node asks for the statistics collected by the Responding node. Loss of control messages is handled by suitable time-outs and retries in the Requesting node. If a test is already in progress when a “Clear Statistics” message arrives at the Responder, the Requestor is sent a “Please Defer” message. This defer procedure prevents multiple concurrent tests from distorting the throughput measurements.
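The responder side of this exchange can be modelled as a small state machine. This is a toy sketch: the message strings, the `NO_TEST` reply and the `Responder` class are hypothetical, no sockets are involved, and a real responder would also need a time-out to release a test abandoned by its requester. A repeated “Clear Statistics” from the requester that already owns the test simply resets the counters, which is what allows retries after a lost OK.

```python
class Responder:
    """Toy model of the UDPmon-style responder protocol (message names are illustrative)."""

    def __init__(self):
        self.owner = None        # requester whose test is in progress, if any
        self.packets = 0
        self.bytes = 0

    def handle(self, sender, msg, payload_len=0):
        if msg == "CLEAR_STATS":
            if self.owner is not None and self.owner != sender:
                return "PLEASE_DEFER"        # another test is running; try later
            # New test, or a retry after the requester's OK was lost: reset counters.
            self.owner, self.packets, self.bytes = sender, 0, 0
            return "OK"
        if msg == "DATA":
            if sender == self.owner:
                self.packets += 1
                self.bytes += payload_len
            return None                      # data packets are not acknowledged
        if msg == "GET_STATS":
            if sender != self.owner:
                return "NO_TEST"
            stats = ("STATS", self.packets, self.bytes)
            self.owner = None                # test finished; accept new requesters
            return stats
        raise ValueError(f"unknown message {msg!r}")
```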

The transmit throughput is calculated from the amount of data sent and the time taken to send it; the receive throughput is calculated from the amount of data received and the time from the first data packet to the last packet received.
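Both calculations reduce to the same formula applied to different intervals, as in the sketch below (the function name and units are our own choices). For transmit, the interval is the sending time at the Requesting node; for receive, it is the time from the first to the last data packet at the Responding node.

```python
def throughput_mbit_s(n_bytes, t_start_s, t_end_s):
    """Throughput in Mbit/s for n_bytes over the interval [t_start_s, t_end_s]."""
    elapsed = t_end_s - t_start_s
    if elapsed <= 0:
        raise ValueError("interval must be positive")
    return n_bytes * 8 / elapsed / 1e6
```

For example, 1,000 packets of 1,400 bytes sent in 0.112 s give a transmit throughput of 100 Mbit/s; if some packets are lost, the receive throughput computed at the other end will be lower.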

Packet loss is measured by the remote node, which checks that the sequence numbers in the packets increase correctly; this check also detects out-of-order packets. The number of packets seen, the number missed as indicated by the sequence number check, and the number received out of order are reported at the end of each test.
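One way to implement such a sequence number check is sketched below; it is not taken from UDPmon. It assumes numbering starts at 0 and that there are no duplicate packets. A gap is provisionally counted as loss, and a late packet that fills an earlier gap is counted as out of order instead. Losses at the very end of a test leave no gap, so the number of packets sent must be supplied to detect them.

```python
def check_sequence(seq_numbers, n_sent=None):
    """Count packets seen, missed and out of order from received sequence numbers.

    Assumes numbering starts at 0 and there are no duplicates. A packet that
    arrives after a higher-numbered one is out of order and no longer counts as missed.
    """
    expected = 0       # next sequence number if everything arrives in order
    missed = 0
    out_of_order = 0
    for seq in seq_numbers:
        if seq == expected:
            expected += 1
        elif seq > expected:
            missed += seq - expected      # gap: provisionally count skipped packets as lost
            expected = seq + 1
        else:
            out_of_order += 1             # late arrival filling an earlier gap
            missed -= 1
    if n_sent is not None and n_sent > expected:
        missed += n_sent - expected       # losses at the tail produce no gap to detect
    return {"seen": len(seq_numbers), "missed": missed, "out_of_order": out_of_order}
```

For the arrival order 0, 1, 3, 2, 5 with 7 packets sent, this reports 5 seen, 1 out of order and 2 missed (packets 4 and 6).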

The Responding node also measures the time between the arrival of successive packets and produces a histogram of these times, which the Requesting node may request at the end of the test. Queue lengths in the network may be investigated by examining how much longer the burst of packets takes to arrive than it took to send. Note that the available bandwidth and packet loss may differ between the directions node a->b and node b->a. The socket buffer sizes, IP precedence bits and IP TOS bits may be set using suitable command line switches.
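The sketch below shows both quantities for arrival times in integer microseconds. The histogram is keyed by bin index (gap divided by bin width). The “extra receive time” is our own illustrative reading of the queue-length comparison: the span of the arrivals minus the span over which the packets were sent, assuming a fixed send spacing.

```python
from collections import Counter


def interarrival_histogram(arrivals_us, bin_us):
    """Histogram of gaps between successive arrivals, keyed by bin index (gap // bin_us)."""
    gaps = [b - a for a, b in zip(arrivals_us, arrivals_us[1:])]
    return dict(sorted(Counter(g // bin_us for g in gaps).items()))


def extra_receive_time_us(arrivals_us, send_spacing_us):
    """How much longer the burst took to arrive than to send (illustrative queueing indicator)."""
    sent_span = (len(arrivals_us) - 1) * send_spacing_us
    return (arrivals_us[-1] - arrivals_us[0]) - sent_span
```

With arrivals at 0, 100, 205, 300 and 1000 µs and 50 µs bins, the histogram is `{1: 1, 2: 2, 14: 1}`; for packets sent every 100 µs the burst arrived 600 µs later than its send span would suggest.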

The UDPmon tool has been fully integrated into the WP7 monitoring architecture and is provided with Perl scripts for scheduling the tests, generating historical time sequence plots and tables. An LDAP backend script has been provided so that the current snapshot may be obtained for publication to the middleware.

MapCenter

MapCenter [R20] was created to provide a flexible presentation layer for the services and applications on a Grid. Current monitoring technologies offer rich functionality and store diverse and accurate results in the “Information System” of Grids, but there is generally no efficient way to represent graphically all the communities, organizations and applications running over Grids. MapCenter was designed to fill this gap.

MapCenter polls computing elements (objects) using different methods: for example, it sends ICMP requests (ping) to check the connectivity of computing elements and makes TCP connections to designated ports to check the services running on them; it will also be able to query the Information Systems of Grids to check the availability of specific Grid services. MapCenter can display various views of a Grid, for example graphical maps, logical views of services, and a full tree of computing elements.
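A TCP service check of the kind described above can be sketched with the standard socket library; this is not MapCenter's code, and `check_tcp_service` is a hypothetical name. Because `connect` completes once the SYN/ACK arrives, the elapsed time is roughly one round trip. Sending ICMP echo requests directly needs raw sockets and usually elevated privileges, so it is omitted here.

```python
import socket
import time


def check_tcp_service(host, port, timeout=2.0):
    """Return (True, connect time in ms) if a TCP connection succeeds, else (False, None)."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, (time.monotonic() - start) * 1000.0
    except OSError:  # refused, unreachable or timed out
        return False, None
```

A poller would call this for each (computing element, port) pair in its configuration and colour the corresponding map object according to the result.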

MapCenter focuses on the presentation layer in a large and heterogeneous environment such as a Grid. It offers a flexible and simple model that can represent any level of abstraction (national and international organizations, virtual organizations, applications, etc.) needed by such environments. In principle, MapCenter can be extended to monitor and present other metrics.

MapCenter has been developed by Franck Bonnassieux (CNRS) within WP7.

RIPE NCC Test Traffic Measurements

The goal of the Test Traffic project is to provide independent measurements of connectivity parameters, such as delays and routing vectors, in the Internet. The project implements the metrics discussed in the IETF IPPM working group. Work on the project started in April 1997, and since then it has been shown that the set-up can routinely measure delays, losses and routing vectors on a large scale. The Test Traffic project is being turned into a service offered by the RIPE NCC to the entire community. This work is described in [R12].

Surveyor

Surveyor [R13] is a measurement infrastructure that is currently being deployed at participating sites around the world. It is based on the standards work of the IETF IPPM WG. Surveyor measures the performance of the Internet paths between participating organisations, and the project is also developing methodologies and tools to analyse the performance data.

One-way delay and packet loss are measured for each path by sending timestamped test packets from one end of the path to the other. The one-way delay is computed by subtracting the timestamp in the packet from the time of arrival at the destination machine.
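The computation can be sketched as below, with timestamps in integer microseconds keyed by sequence number (an illustrative data layout, not Surveyor's). It only gives meaningful results if the sender and receiver clocks are closely synchronized, since any clock offset appears directly in every delay.

```python
def one_way_delays(sent_us, received_us):
    """sent_us / received_us map sequence number -> timestamp in microseconds.

    Assumes sender and receiver clocks are synchronized; any offset between them
    appears directly as an error in the computed delays.
    """
    if not sent_us:
        raise ValueError("no packets sent")
    delays = {seq: received_us[seq] - t for seq, t in sent_us.items() if seq in received_us}
    loss_pct = 100.0 * (len(sent_us) - len(delays)) / len(sent_us)
    return delays, loss_pct
```

Packets that never arrive have no delay entry and are counted towards the loss percentage.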

NIMI

The National Internet Measurement Infrastructure (NIMI) is a project, begun by the US National Science Foundation and currently funded by DARPA, to measure the global Internet. It is based on a Network Probe Daemon and was designed to be scalable and dynamic. NIMI is scalable in that NIMI probes can be delegated to administration managers for configuration information and measurement co-ordination. It is dynamic in that the measurement tools are external, third-party packages that can be added as needed. Full details can be found at [R28], together with a discussion of associated issues at [R29].

MRTG

The Multi Router Traffic Grapher (MRTG) [R14] is a passive tool for monitoring the traffic load on network links. MRTG generates HTML pages containing images that give a live visual representation of this traffic. It consists of a Perl script that uses SNMP to read the traffic counters of routers, logs the traffic data and creates graphs representing the traffic on the monitored network connection. These graphs are embedded in web pages that can be viewed from any modern Web browser.
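Turning two successive readings of an SNMP interface octet counter into a traffic rate requires handling counter wrap-around, since 32-bit counters restart at zero after 2^32 - 1. The sketch below shows the arithmetic (the function name is our own, and this is not MRTG's code); it assumes the counter wrapped at most once between polls, which is why fast links need frequent polling or 64-bit counters.

```python
COUNTER32_MODULUS = 2 ** 32   # SNMP Counter32 values wrap to 0 after 2^32 - 1


def octet_rate_bps(prev_octets, curr_octets, interval_s, modulus=COUNTER32_MODULUS):
    """Average bit rate between two polls of an interface octet counter.

    Assumes the counter wrapped at most once during the interval.
    """
    delta = (curr_octets - prev_octets) % modulus
    return delta * 8 / interval_s
```

For instance, a counter that goes from 4,294,967,000 to 704 has advanced by 1,000 octets, not by a negative amount.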

TracePing

Traceping [R15] uses packet loss as its metric of network quality. In every case investigated, the variations in packet loss recorded by traceroute and ping, and hence by Traceping, have been found to reflect changes in the real performance experienced at the user level. No measurements or estimates are made of either the systematic or the random errors in the packet loss data, so the numbers should be interpreted simply as qualitative indicators of the state of a network connection: the higher the numbers, the lower the quality. Traceping is currently a VMS-specific tool, so its value is strictly limited, although there is a proposal to make it more generally applicable.

Netflow and cflowd

Cflowd [R18] was developed to collect and analyse the information available from NetFlow flow export. It allows the user to store this information and provides several views of the data, producing port matrices, AS matrices, network matrices and pure flow structures. The amount of data stored depends on the configuration of cflowd and ranges from a few hundred Kbytes to hundreds of Mbytes per router per day.
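To illustrate what an AS matrix is, the sketch below sums packets and bytes per (source AS, destination AS) pair from a list of flow records. NetFlow version 5 records do carry source and destination AS numbers and packet and byte counts, but the `FlowRecord` type here is a simplified, hypothetical stand-in, and this is not cflowd's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    """Simplified flow record (hypothetical; real NetFlow records carry many more fields)."""
    src_as: int
    dst_as: int
    packets: int
    octets: int


def as_matrix(flows):
    """Sum (packets, bytes) for each (source AS, destination AS) cell."""
    matrix = defaultdict(lambda: [0, 0])
    for f in flows:
        cell = matrix[(f.src_as, f.dst_as)]
        cell[0] += f.packets
        cell[1] += f.octets
    return {k: tuple(v) for k, v in matrix.items()}
```

Port and network matrices follow the same pattern with a different key, such as port pairs or address prefixes.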

Network Weather Service

The NWS [R19] is a distributed system that periodically monitors, and dynamically forecasts, the performance that various network and computational resources can deliver over a given time interval. The service operates a distributed set of performance sensors (network monitors, CPU monitors, etc.) from which it gathers readings of the instantaneous conditions. It then uses numerical models to forecast what the conditions will be over a given time frame. The functionality is intended to be analogous to weather forecasting, hence the system's name.
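The NWS approach to forecasting runs several simple predictors over each measurement series and uses whichever has had the lowest cumulative error so far. The sketch below follows that idea with an illustrative set of four predictors and squared error. The NWS's actual predictor set and error measures are more extensive, and `nws_style_forecast` is a hypothetical name.

```python
from statistics import median


def nws_style_forecast(history):
    """Pick the predictor with the lowest cumulative squared error and use it to forecast."""
    if not history:
        raise ValueError("empty history")
    predictors = {
        "last": lambda h: h[-1],
        "mean": lambda h: sum(h) / len(h),
        "median": lambda h: median(h),
        "sliding_mean_5": lambda h: sum(h[-5:]) / len(h[-5:]),
    }
    errors = {name: 0.0 for name in predictors}
    # Replay the series: each predictor forecasts value i from values 0..i-1.
    for i in range(1, len(history)):
        past = history[:i]
        for name, predict in predictors.items():
            errors[name] += (predict(past) - history[i]) ** 2
    best = min(errors, key=errors.get)
    return best, predictors[best](history)
```

On a steadily rising series such as `[1, 2, 3, 4, 5]`, the "last value" predictor has the smallest error, so the forecast is 5; on a noisy but stable series, an averaging predictor would usually win.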

The NWS provides an inclusive environment to which additional sensors may be added. While it may be seen and used merely as a means of providing forecasts based on current metric measurements, in reality it can provide a complete, self-contained monitoring environment.

NetSaint

NetSaint [R26] is a program that monitors hosts and services on a network. It can send an email or a page when a problem arises and when it is resolved. NetSaint is written in C and is designed to run under Linux, although it should work under most other Unix variants. It can run either as a normal process or as a daemon, intermittently running checks on the specified services. The actual service checks are performed by external “plugins”, which return service information to NetSaint. Several CGI programs are included with NetSaint so that the current service status, history, etc. can be viewed via a web browser.
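A plugin of the kind described above can be sketched as follows: it turns a measured RTT into a status and a one-line message. We assume the status is reported as a process exit code with 0, 1 and 2 meaning OK, WARNING and CRITICAL (the convention later used by Nagios plugins); the thresholds, message format and function names are hypothetical.

```python
# Assumed exit-code convention for plugin status (the one later used by Nagios plugins).
OK, WARNING, CRITICAL = 0, 1, 2


def check_rtt(rtt_ms, warn_ms, crit_ms):
    """Map a measured RTT (None = no reply) to a (status, one-line message) pair."""
    if rtt_ms is None:
        return CRITICAL, "RTT CRITICAL - no reply"
    if rtt_ms >= crit_ms:
        return CRITICAL, f"RTT CRITICAL - {rtt_ms:.1f} ms"
    if rtt_ms >= warn_ms:
        return WARNING, f"RTT WARNING - {rtt_ms:.1f} ms"
    return OK, f"RTT OK - {rtt_ms:.1f} ms"


def main(argv):
    """Hypothetical entry point: argv = [rtt_ms, warn_ms, crit_ms]; prints the message."""
    status, message = check_rtt(*(float(a) for a in argv[:3]))
    print(message)
    return status
```

A plugin script would finish with `sys.exit(main(sys.argv[1:]))`, so that the monitoring program receives the status as the exit code and the message on standard output.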

Network Monitoring Tools: Passive

The Grid Applications

Grid applications are expected to record the throughput they experience in normal operation and to make it available along with the data collected by other network monitoring tools. GridFTP already has the capability to record such information for each transfer. This information will form a major component of the network monitoring data recorded by the Grid, but it will need to be carefully interpreted and compared with the results from active monitoring of the Grid.

Per flow application throughput with GridFTP

GridFTP is an extension of the standard FTP mechanism for use in a Grid environment, and it is envisaged that it will become a standard protocol for data transfer. Per flow application throughput is the throughput measured for a specific GridFTP transfer between specified end-points. It will be based on a ‘passive’ measurement of the data transferred, i.e. only the information actually transferred and no additional (test) data. The data to be stored for each GridFTP transfer is as follows:

- Source

- Destination

- Total Bytes / Filesize

- Number of Streams Used

- TCP buffer size

- Aggregate Bandwidth

- Transfer Rate (Mbytes/sec)

- Time Transfer Started

- Time Transfer Ended
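A per-flow record holding these fields might look like the sketch below. The field names are illustrative, not those of any proposed schema. Only the transfer rate is derived here, taking 1 Mbyte as 10^6 bytes (an assumption). The aggregate bandwidth field is left out because its exact definition is not given above.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class GridFTPTransfer:
    """One per-flow record with the fields listed above (field names are illustrative)."""
    source: str
    destination: str
    total_bytes: int
    streams: int
    tcp_buffer_bytes: int
    started: datetime
    ended: datetime

    @property
    def duration_s(self) -> float:
        return (self.ended - self.started).total_seconds()

    @property
    def transfer_rate_mbytes_s(self) -> float:
        """Bytes moved divided by wall-clock time, with 1 Mbyte taken as 10**6 bytes."""
        return self.total_bytes / self.duration_s / 1e6
```

A 500 Mbyte file moved in 100 seconds, for example, gives a transfer rate of 5.0 Mbytes/sec regardless of how many parallel streams were used.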

A schema has been proposed [R27] that uses a patched version of GridFTP in which transfers are recorded and summary data stored. It is not incorporated here because debate continues over the exact format. However, it is hoped that the two schemes will be unified once a standard network monitoring schema has been agreed.

Future releases of GridFTP are expected to incorporate features such as automatic negotiation of TCP buffer/window sizes, parallel data transfer and reliable data transfer.

Grid volume

To measure the volume of data transferred on the Grid as a function of time (daily, weekly, monthly or yearly), it has been proposed that information from data transfers across the Grid be aggregated as the transfers proceed. If GridFTP is used for data transfer, the relevant values can be taken from the GridFTP measurements and simply summed; otherwise, the software used for transfers should take account of this requirement. The total may or may not include data transferred by active (test) measurements.
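The aggregation step can be sketched as summing the bytes of each transfer into the period it belongs to. The input format (a date and a byte count per transfer) and the period labels are assumptions for illustration; weeks are labelled with ISO week numbers.

```python
from collections import Counter
from datetime import date
from typing import Iterable, Tuple

PERIOD_KEYS = {
    "day": lambda d: d.isoformat(),
    "week": lambda d: "{}-W{:02d}".format(*d.isocalendar()[:2]),
    "month": lambda d: f"{d.year}-{d.month:02d}",
    "year": lambda d: str(d.year),
}


def grid_volume(transfers: Iterable[Tuple[date, int]], period: str) -> dict:
    """Sum transferred bytes per day, week, month or year."""
    key = PERIOD_KEYS[period]
    totals = Counter()
    for day, n_bytes in transfers:
        totals[key(day)] += n_bytes
    return dict(sorted(totals.items()))
```

Because the totals are plain sums, running totals can be updated as each transfer completes rather than recomputed from the full history.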

An alternative approach is to infer Grid volume from the counters available in network devices, for example via SNMP. This requires Grid traffic to be identified within the total traffic carried by the network devices and will require further investigation.

Realization of the network monitoring architecture

The separate components required by the network monitoring architecture have been developed and demonstrated. The WP7 network monitoring testbed sites have been used to demonstrate the operation of a variety of network monitoring tools and their ability to collect data and to make network monitoring metrics available via a Web interface. Separately, scripts have been demonstrated that extract the network metrics from the network monitoring data store and make them available via an LDAP service. The combined capability was demonstrated in the release installed in DataGrid testbed1, in which the round-trip time and packet loss metrics were made available.

This prototype deliverable extends that release to include the publication of RTT, packet loss, and both TCP and UDP throughput via both LDAP services and the Web. In addition, multiple tools are provided that measure and report on these same metrics. Within the LDAP schema a “default metric” measurement will be available, as well as one specific to each monitoring tool. The purpose is to demonstrate the extensibility of the architecture, to provide an easy means of validating the output of a specific tool, and to offer a different “look and feel” for visualization. Figure 2 shows this architecture and the relationships between the components of the network monitoring deliverable.

Figure 2: Diagrammatic representation of the network monitoring architecture, showing the components and their inter-relationships.

Network Monitoring Tools: Activities

In essence, the results of monitoring activities may be separated on the basis of time. The immediate output of monitoring provides a snapshot of current conditions within the network, whilst historical data can be used to look back over days, weeks and months at the behavior of the network. These two uses serve very different purposes. The former will be used either directly by an end user wishing to optimize the run of a particular application or, more likely, by the application itself, via the middleware, to adjust its usage of network resources in real time. The latter will be used to manage the network, to review trends in usage and to ensure that sufficient provision exists to meet demand.

Network Monitoring Tools: Active and Passive Network Monitoring

In order to obtain monitoring information about the network, tests need to be performed and these can be broadly classified into two categories.

Active network monitoring sends test traffic directly through the network in order to discover the properties of the end-to-end connection. This traffic is in addition to the usual load on the network. The approach uses a variety of network monitoring tools, and the tests can be scheduled to minimise the impact on network users while still providing an accurate measurement of a particular network metric.

Passive network monitoring uses real applications and their traffic to record the application's experience of using the network. For example, GridFTP can be used to record the throughput of real Grid traffic across the network, and similarly with other applications. This has the benefit that no additional traffic is introduced into the network. However, because the timing of the measurements is determined by when users perform their tasks, the results reflect the users' experience and may not accurately record the capability of the network. In addition, correcting any maintenance issues in the monitoring aspects of the application depends on the maintainers of the Grid application itself.

The PM12 deliverable supports only active forms of monitoring, in which network metric information from the monitoring processes is made available in a form that allows it to be published to the middleware via an LDAP service. WP7 has worked closely with the GridFTP developers to ensure that such applications will in future record the appropriate information and, when available, fit directly into the architectural model described here.

This initial implementation uses a query/response scheme to request network monitoring information collated from logs and stored in an LDAP server. This is broadly a “pull mechanism”: the monitoring information is stored locally, and the LDAP service periodically updates its information by fetching the network monitoring metrics. In future, consideration will also be given to automatic streamed updates through a “push mechanism”, in which the monitoring tool periodically makes the network metric information available to the LDAP service.
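A minimal sketch of the pull side is shown below. It is a lazily refreshed variant in which the LDAP-side copy of a metric is re-fetched from the local data store only when a query finds it older than a maximum age. The class name, the `fetch` callable and the age policy are hypothetical. The clock is injectable so that the behaviour can be checked without waiting.

```python
import time


class PulledMetric:
    """Serve a metric from a local copy, re-fetching it from the data store when stale."""

    def __init__(self, fetch, max_age_s, clock=time.monotonic):
        self._fetch = fetch          # callable that reads the metric from the local data store
        self._max_age_s = max_age_s
        self._clock = clock
        self._value = None
        self._fetched_at = None

    def get(self):
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at > self._max_age_s:
            self._value = self._fetch()      # pull: the LDAP side initiates the update
            self._fetched_at = now
        return self._value
```

In this design the monitoring tools never contact the LDAP server, which keeps the server's load proportional to the query rate rather than to the measurement rate.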

There is concern over the feasibility of using the “push mechanism” in real Grid environments, as servers may be flooded with so much information that they have few resources left for anything else. This has been identified as a topic for future work.